Model synchronization from dbt to Metabase and vice-versa

Global documentation with local input

In theory, the proper documentation of BI processes is as fundamental as the treatment of the data itself. However, in practice, the former is often overlooked because the priority is heavily placed on delivering results in the form of reports and dashboards. This is a common misconception, as a rich documentation can save time (and money) in future processes. In this article, we highlight the importance of the documentation of data architecture in business intelligence and also introduce a solution for model synchronization from dbt to Metabase which facilitates access to table relationships, model and column descriptions, semantic types, and exposures.

“The broader the BI stack becomes, the more difficult it gets to keep track and to document the nuances along the pipeline process”

The importance of data architecture documentation in business intelligence

In short, the appropriate documentation of data architecture is important in business intelligence because it helps to ensure that the data being analyzed is accurate and reliable. This is essential for making informed business decisions based on the data. Therefore, having a clear understanding of the data structure allows users to know how the data is organized and how it can be accessed and used. This includes information about the data sources, the data model, and the relationships between different data elements.

Moreover, proper documentation helps to improve the maintainability of the BI system over time. It allows new users to quickly understand the data and how it is used. Furthermore, it helps to ensure that changes to the data structure are properly documented and communicated to all relevant stakeholders.

The challenges

The broader the BI stack becomes, the more difficult it gets to keep track and to document the nuances along the pipeline process. This requires repetitive documentation in every step of the process, which ultimately compromises the quality of the documentation, or even its creation as a whole. Additionally, the pressure put on data analysts to produce results within tight deadlines often depletes all capacities again deprioritizing the documentation process.

The solutions

While the focus of current and new data tools has been put almost entirely on data processes rather than its documentation, it is no surprise that the latter is often overlooked. Fortunately, this is changing as more recent tools such as dbt (Data Build Tool) facilitates the creation and visualization of documentation.

dbt stand alone documentation

dbt docs generate is a command in the dbt CLI (command-line interface) that generates documentation for your dbt project. In dbt cloud generating this documentation is equally straightforward (https://docs.getdbt.com/docs/collaborate/build-and-view-your-docs). This documentation includes information about your project’s models, tests, and seed files, as well as the relationships between these elements.

When you run the dbt core docs generate command, dbt will create a docs/ directory in your project, and within that directory, it will create Markdown files for each model, test, and seed file in your project. These Markdown files contain information about the SQL code in each file, as well as any comments or documentation you have written in your dbt project.

dbt core docs serve is another command in the dbt CLI that allows you to view the documentation that has been generated by the dbt core docs generate command. In dbt cloud your documentation is made available within the UI itself. When you run dbt core docs serve, dbt will start a local web server that serves the documentation for your project. You can then view the documentation by visiting the URL provided by dbt in your web browser.

Both the dbt core docs generate and dbt core docs serve commands are useful for creating and viewing documentation for your dbt project, which can help you understand your project’s structure and how it is built. They can also be helpful for others who are working on your project, as they provide a clear overview of the project’s components and how they fit together.

“The dbt-metabase package solves this problem by propagating table relationships, model and column descriptions as well as semantic types (e.g. currency, category, URL) from dbt to Metabase data models. ”

Metabase documentation

Metabase, a cloud-based data visualization tools with good self-service BI capabilities, offers a ‘data reference’ section where a collection of pages is organized by database, then tables in that database, and it is where information about tables and data types of columns can be found. Of note, each table or column will only contain a description if your admin wrote something in the Data Model section of the Admin Panel.

The optimal solution: dbt-metabase synchronization

As mentioned above, both dbt and Metabase allow for the insertion of tables’ and columns’ descriptions as well as information about the relationship between these items. Nonetheless, this process becomes repetitive when the same information has to be entered in both platforms, which again ends up being ignored or even forgotten.

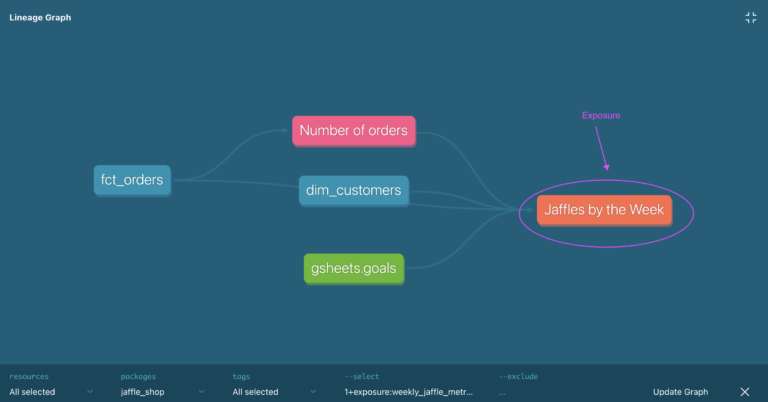

The dbt-metabase package solves this problem by propagating table relationships, model and column descriptions as well as semantic types (e.g. currency, category, URL) from dbt to Metabase data models. Moreover, dbt-metabase also facilitates the extraction of exposures from Metabase which demonstrates how dbt models are exposed in BI, which closes the loop between ELT, modeling, and consumption.

In a production environment, exposures can be graphically seen as the lineage upon ‘dbt docs generate’ after the dbt-metabase exposures command. This makes dbt docs a useful utility for introspecting the data model from source consumption with zero extra/repeated human input.

Using the package

The ‘how-to’ document is somewhat technical, and it can take a few trial-and-error iterations before you get the dbt-metabase synchronization to come to fruition. Below we highlight the challenges we came across, as well as the solutions that allowed the successful application of the package.

- 1.1 – set up the config file first

The easiest approach was to set up a dbt-metabase config file first and only then run the main commands (i.e., dbt-metabase models/exposures). Using the dbt-metabase config command, you can enter an interactive configuration session where you can cache default selections for arguments. This creates a config.yml in ~/.dbt-metabase. This is particularly useful for arguments which are repeated on every invocation like metabase_user, metabase_host, metabase_password, dbt_manifest_path, etc. - 1.2 – Metabase database name

Another important note to remember while setting up the config file, is that when entering ‘metabase database name’, this should be the name given to your database within metabase (see picture below) and NOT the name used in the source such as ‘RAW’ or ‘REPORTING’ (unless you named your metabase database as such). In the picture below, you can see where you can find the metabase database name.

- 2 – save the exposures file in the Model folder

Ensure the yml file created to store exposures is saved in the model folder, otherwise dbt will fail to display the exposures upon dbt docs generate command.

Overall, documentation of data architecture is critical for the successful implementation and use of a business intelligence system, and it is an essential part of any BI project. This is why accessible documentation of the process from data source to report is so important, not only to keep track of data but also to easily describe how and where the data is being used. While both dbt and Metabase offer the possibility of describing tables, columns and relationships between these items, this means that valuable resources are being used to document the same work twice. This problem is solved with dbt-metabase, a package that propagates table relationships, model and column descriptions and semantic types (e.g. currency, category, URL) to your Metabase data model and extracts dbt model exposures from Metabase.

Useful links

Dbt: https://www.getdbt.com/

Metabase: https://www.metabase.com/

Dbt-metabase synchronization: https://pypi.org/project/dbt-metabase/